How to kickstart AI projects in government — lessons from Border Force, HMRC and GIAA

Artificial Intelligence (AI) is on everyone’s lips. In government, there’s an urgency to do something with AI, driven by the sense that it’s a “must have” technology. Yet despite the enthusiasm and strategic ambition, many civil servants grapple with where to start and how to ensure projects deliver value.

A recent webinar, A Civil Servant’s Guide to Getting AI Projects off the Ground, hosted by Public Technology and Zaizi, brought together digital leaders from Border Force, HM Revenue & Customs (HMRC), the Government Internal Audit Agency (GIAA) and Zaizi to share practical advice on getting started.


Where to begin? The big question

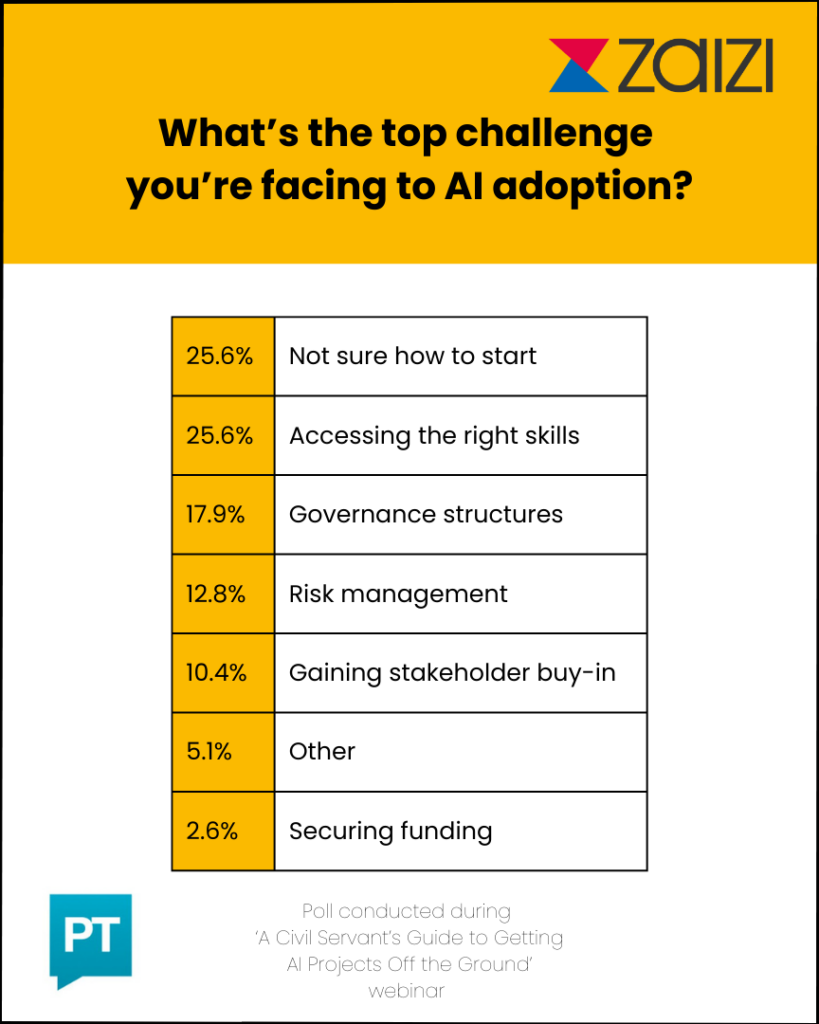

A live poll during the session highlighted a telling insight: most attendees were unsure how to start their AI journey.

While many organisations want to harness AI’s potential, the transition from vision to reality is fraught with barriers. Chief among them are accessing the right skills, gaining stakeholder buy-in, managing risk and navigating governance structures.

Notably, funding wasn’t cited as a significant barrier, signalling that the issue lies in execution rather than ambition.

Show value: Map AI to organisational goals and user needs

Chijioke (Chino) Nwachukwu, previously Assistant Director of Border Transformation at Border Force and now a Senior Programme Manager at the UK Health Security Agency, stressed the importance of showing senior leaders how AI initiatives align with organisational goals.

Communicating the “benefits and the value to the operational teams and the organisational goals are key,” he said, emphasising the need to get frontline users alongside senior leadership in those early discussions.

He added that showing senior leaders how any solution will deliver operational efficiency and/or drive value for money is important.

Dr Lauren Petri, Head of Data Analytics at the Government Internal Audit Agency, echoed the need to align user needs with organisational objectives. “I think that was really interesting in the poll – the most common issue people were facing was how to get started,” she said.

“Certainly, for us, one of the key things was understanding the challenges for users and putting that in the context of the organisation’s objectives and identifying things that will make a difference to them.”

By creating ‘wrappers’ around existing GPT models, GIAA is developing self-service tools for specific tasks that align with organisational goals.

Lauren spoke about how her small team developed internal tools like the Risk Engine and Insights Engine to drive productivity. For example, the Risk Engine uses AI to identify risks in seconds, something that used to take teams “three hours in a workshop.”
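To make the ‘wrapper’ idea concrete, here is a minimal sketch in Python. It is not GIAA’s implementation, which the webinar did not describe in detail. The names `TaskWrapper`, `stub_model` and `risk_wrapper` are hypothetical, and the model is a stand-in function so the example runs without any external service. The point it illustrates is that the wrapper fixes the instructions for one task, so the user only supplies their own input.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical type: any function that takes a prompt string and returns text.
# In a real tool this would call whichever approved model endpoint is in use.
ModelFn = Callable[[str], str]


@dataclass
class TaskWrapper:
    """Fixes the instructions for one task so users only supply their input."""

    task_name: str
    instructions: str
    model: ModelFn

    def build_prompt(self, user_input: str) -> str:
        # Reject empty input before any model call is made.
        cleaned = user_input.strip()
        if not cleaned:
            raise ValueError("user_input must not be empty")
        return f"{self.instructions}\n\nInput:\n{cleaned}"

    def run(self, user_input: str) -> str:
        # Combine the fixed instructions with the user's text and call the model.
        return self.model(self.build_prompt(user_input))


def stub_model(prompt: str) -> str:
    # Stand-in for a real model call so the sketch runs offline (assumption).
    return f"[model reply to {len(prompt)} characters of prompt]"


# Hypothetical example of a single-purpose, self-service tool.
risk_wrapper = TaskWrapper(
    task_name="risk-identification",
    instructions="List the main risks in the project description below.",
    model=stub_model,
)
print(risk_wrapper.run("Migrating a legacy case system to the cloud."))
```

Keeping the model behind a plain function makes it straightforward to swap the stub for a real, approved endpoint later, and to test the prompt logic without calling a model at all.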

Break silos: Get stakeholder alignment and buy-in

Clayton Smith from Zaizi underscored the importance of aligning leaders and other stakeholders by taking them on a journey of what’s possible.

“When we talk about AI projects in government, we are not just talking about delivery teams or project teams or digital, data or technology teams,” he said, emphasising the impact of having multidisciplinary teams. “It’s often cross-cutting — operations, strategy, data leads, digital colleagues, technology colleagues. That alignment and that collaboration early on is extremely important. It helps with risk mitigation. It helps with delivering value. And it also helps with governance.

“As a supplier, part of our role is to bring all of those bodies and that thinking together,” he added, pointing to Zaizi’s Transformation Day approach as proof that “you don’t need to take 12 months to get AI projects off the ground.”

“The sooner we can do that, the sooner you identify problems,” he added. “You find a way to deliver solutions more quickly. And it helps to build that trust in terms of building that business case, securing funding and getting projects off the ground successfully.”

Fail fast to succeed: Test and iterate

Clayton also pointed to the importance of having a “failing fast” mentality. “Not every AI project in government will be successful,” he said, but accepting that enables quick learning and iteration.

This view is shared by Lauren: “You do need some freedom in that kind of exploratory mode to be able to try things out,” she said. “Just echoing what Clayton said about being comfortable in failing fast in what you’re doing. Build these proof of concepts really quickly. Let’s test them out. If they don’t work, move on to the next one. If you’ve got that kind of bank of ideas and priorities, you can just keep working through those.”

Lauren also stressed the need for alignment and a clear understanding of your goals before drafting a business case. She warned that organisations often draft business cases too early, before fully understanding what they want to achieve.

“If you do it too early in a project — before you’ve actually understood exactly what you’re trying to do — you’re almost less likely to want to give up because you’ve already put in all the hard work.”


Doing it right: Lessons from Border Force

Border Force’s AI journey demonstrates how to launch impactful projects efficiently. Confronted with the growing complexity of managing the flow of people and goods across UK borders, Border Force explored how AI and machine learning could improve efficiencies. They set an ambitious challenge: deliver a proof of concept in just 12 weeks.

Chino shared insights from the successful AI pilot during the webinar. The team had valuable data that could be used to develop predictive models to drive efficiencies and reduce the risks associated with manual work. Having identified specific use cases, Chino said they sought the help of digital and AI specialists, including Zaizi.

“They looked at the data sets and were able to advise on which of these use cases they could satisfy. And then we set about beginning to address those use cases and develop models that allowed us to undertake predictions,” he said.

Clayton said Zaizi brought the right skills and perspectives to the project, combining deep knowledge of government and industry to collaborate closely with Border Force and other suppliers.

“We thought about the outcomes that Chino wanted to deliver, what border security wanted to get delivered, but also focusing on the users,” added Clayton.

“This project has delivered enormous value. And I know Border Force are extremely excited about the potential.”

The pilot project yielded impressive results. The team is now trialling the solution in an operational context, allowing them to gather more data and progressively enhance the models.

The approach demonstrates the benefits of a careful, iterative development method, focusing on refinement and practical testing before full-scale deployment.

Governance tightrope: Lessons from HMRC

As one of the largest government departments, HMRC balances innovation with a strong governance framework.

Anna Kwiatkowska, Deputy Director for Data Science at HMRC, explained that HMRC uses a two-pronged approach to AI governance: project-level governance to manage risks and benefits, and centralised governance that maintains oversight of AI projects across the department.

Anna also addressed the use of AI in relation to data protection, and how HMRC evaluates AI models against its assurance framework to detect and manage any biases.

She noted that ChatGPT’s launch turbocharged the department’s interest in using AI to address business challenges.

“We created governance; how we would prioritise those use cases,” she said. “But whatever we choose, it is still focused on the delivery of three main things; closing the tax gap, modernising how we serve customers and improving productivity.”

Anna explained that HMRC’s use of AI is mainly for supportive tasks: “Advising people, selecting text for people, providing summaries, helping navigate the world – rather than doing any automated decisions.”

“And that is still within the safeguards in place, the security in place, the ethics in place, everything that underpins trust,” she added.

So the final takeaways?

For organisations eager to demonstrate quick wins, the main advice was clear: solve specific operational problems that directly support your organisation’s goals. “Focus on solving actual business problems that bring value rather than explore shiny tech,” said Anna.

The panellists agreed on a measured approach to AI adoption. “Start small, prototype, iterate and then scale up,” Chino advised.

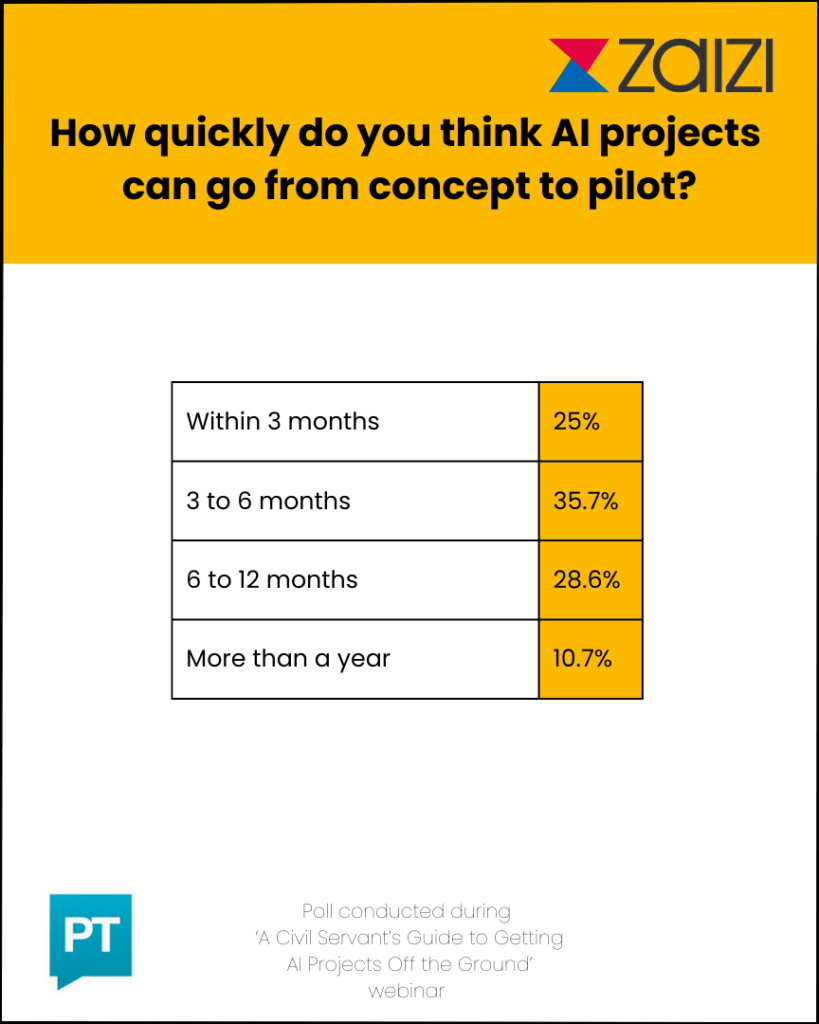

Border Force’s AI project showed that well-executed initiatives can quickly deliver value. That view was shared by 60% of attendees, who felt AI projects can go from concept to pilot within half a year.

Chino also said that determining the business objectives and solving well-defined problems are essential to securing stakeholder support and governance approval. He recommended identifying processes that are repetitive, error-prone and time-consuming.

Clayton added that government organisations should also seek external expertise to introduce diverse viewpoints and bring in the right skills to drive innovative solutions.

And Lauren highlighted the human element and why winning hearts and minds early is crucial: “Bring them on the journey and actually make sure you’re supporting them with the tools that you’re building.”

Watch the webinar now and if you have any questions about the event please feel free to reach out.
